11 min read


Ad fraud, also known as advertising fraud, is the practice of viewing, clicking, converting, or generating false interactions with any web asset for the sole purpose of earning money directly or indirectly.

Each year, ad fraud costs businesses billions of dollars in lost ad spend. Not only do costs become inflated, but this fraudulent activity skews performance metrics. That makes it challenging to measure the effectiveness of campaigns.

Protect your advertising investments and the integrity of your campaigns with the right ad fraud detection software.


Ad fraud comes in many different shapes and sizes.

Creating an effective strategy to combat it starts with understanding how ad fraud happens.

Short for “robot,” a bot is an automated software program built to carry out a specific task that most people would find too repetitive or time-consuming. Bot traffic usually mimics normal activity such as clicking an ad, placing items in shopping carts, commenting on social media, or filling out a form. Bot fraud often relies on bot farms: large collections of bots acting in unison, usually referred to as botnets.

Malware is malicious software installed on an unsuspecting user's desktop or mobile device and designed to mimic a real user, performing tasks that steal advertising dollars: viewing or clicking on ads, watching videos, filling in forms, and other unsavory activities, all without the user's knowledge.

Human fraud is the hardest form of fraud to detect as it relies on a network of humans, instead of using bots or malware, to interact with ads and create false transactions. Because actual humans are interacting with the ads, there are no obvious signs that help ad fraud detection tools differentiate normal traffic from human fraud.

Sophisticated invalid traffic (SIVT) refers to highly advanced techniques used to generate fraudulent traffic, and it is far more challenging to detect. SIVT can include advanced bots that closely mimic human traffic, hijacked devices, malware, invalid proxy traffic, cookie manipulation techniques such as cookie stuffing, and human fraud farms.

General invalid traffic (GIVT) encompasses simpler, more easily detectable forms of invalid traffic. GIVT refers to bots, crawlers, spiders, or any other kind of non-human traffic typically routed from a data center IP address. GIVT also covers traffic identified through activity-based filtration, as well as browsers that pre-render pages.
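Because GIVT typically originates from data center IP addresses, a first-pass filter can simply compare each visitor's IP against a list of known data center ranges. The sketch below uses Python's standard `ipaddress` module; the ranges in `DATA_CENTER_RANGES` are hypothetical placeholders taken from the RFC 5737 documentation blocks, not a real data center list, which in practice would come from a regularly updated feed.

```python
import ipaddress

# Hypothetical data center ranges for illustration only (RFC 5737 documentation blocks).
# A real filter would load a maintained list of hosting and cloud provider ranges.
DATA_CENTER_RANGES = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def is_data_center_ip(ip: str) -> bool:
    """Return True if the IP falls inside any listed data center range."""
    addr = ipaddress.ip_address(ip)
    # Membership is simply False when IP versions differ (IPv6 address vs IPv4 network).
    return any(addr in network for network in DATA_CENTER_RANGES)


def split_givt(visits: list[dict]) -> tuple[list[dict], list[dict]]:
    """Separate visits from data center IPs (likely GIVT) from the remaining traffic."""
    givt, remaining = [], []
    for visit in visits:
        (givt if is_data_center_ip(visit["ip"]) else remaining).append(visit)
    return givt, remaining


if __name__ == "__main__":
    givt, remaining = split_givt([{"ip": "203.0.113.7"}, {"ip": "192.0.2.44"}])
    print(len(givt), len(remaining))  # 1 1
```

An IP list only catches the simplest GIVT; SIVT that routes through residential proxies or hijacked devices will pass this check.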

Non-human traffic (NHT), also known as bot traffic, refers to traffic generated by automated bots or scripts rather than real human visitors. Bots can simulate user behavior, such as clicking on ads, visiting websites, or filling out forms, leading to inflated ad metrics and misleading campaign performance. NHT can be a subset of both SIVT and GIVT categories.

An abnormally high click-through rate (CTR) that surpasses industry benchmarks can indicate fraud. Excessively high CTR may suggest click fraud, where fraudulent clicks are artificially generated to inflate engagement metrics.
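Checking CTR against a benchmark is simple arithmetic: CTR is clicks divided by impressions. This minimal sketch flags any campaign whose CTR exceeds a chosen multiple of the benchmark; the 2% benchmark and 3x multiplier are illustrative assumptions, not industry figures, so substitute the benchmarks for your own channel and vertical.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Return CTR as a fraction of impressions (0.02 means 2%)."""
    if impressions == 0:
        return 0.0
    return clicks / impressions


def flag_high_ctr(campaigns: dict[str, tuple[int, int]],
                  benchmark: float, multiplier: float = 3.0) -> list[str]:
    """Return names of campaigns whose CTR is more than `multiplier` times the benchmark.

    `campaigns` maps a campaign name to a (clicks, impressions) pair.
    """
    limit = benchmark * multiplier
    return [name for name, (clicks, impressions) in campaigns.items()
            if click_through_rate(clicks, impressions) > limit]


if __name__ == "__main__":
    # Hypothetical campaign numbers for illustration.
    campaigns = {"search_a": (180, 10_000), "display_b": (1_200, 10_000)}
    print(flag_high_ctr(campaigns, benchmark=0.02))  # ['display_b']
```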

When your leads express confusion about receiving calls or communications from your business when they never filled out a form or expressed interest, it raises concerns about the legitimacy of your lead generation efforts. Such confusion is often an indication of ad fraud.

Ad fraud can disrupt the natural conversion funnel, leading to irregular conversion patterns. Unusual spikes or inconsistencies in conversion rates, especially when not aligned with campaign objectives or user behavior, may be indicative of fraudulent activities.

Ad fraud can result in inconsistent user engagement metrics. For example, unusual patterns in time spent on-site, pages visited, or interactions may imply non-human traffic or automated bot activity instead of genuine user engagement.
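One way to quantify inconsistent engagement is to score each session against a few behavioral signals. This sketch treats a session as likely automated when it lasts under two seconds, views a single page, and records no interaction events; the `Session` fields and the two-second cutoff are hypothetical choices for illustration, and a production system would weigh far more signals.

```python
from dataclasses import dataclass


@dataclass
class Session:
    session_id: str
    seconds_on_site: float
    pages_viewed: int
    interactions: int  # hypothetical count of scrolls, mouse moves, taps, and key presses


def looks_automated(s: Session, min_seconds: float = 2.0) -> bool:
    """Heuristic: near-zero dwell time, a single page, and no interaction events."""
    return s.seconds_on_site < min_seconds and s.pages_viewed <= 1 and s.interactions == 0


def suspicious_share(sessions: list[Session]) -> float:
    """Return the fraction of sessions that match the automated-engagement heuristic."""
    if not sessions:
        return 0.0
    return sum(looks_automated(s) for s in sessions) / len(sessions)


if __name__ == "__main__":
    sessions = [
        Session("a", 0.4, 1, 0),
        Session("b", 95.0, 4, 37),
        Session("c", 1.1, 1, 0),
        Session("d", 30.0, 2, 5),
    ]
    print(suspicious_share(sessions))  # 0.5
```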

Unusually high traffic from specific geographic regions or IP addresses could be a sign of ad fraud. Fraudsters may manipulate traffic sources to generate fake impressions or clicks, targeting specific locations to appear more legitimate.
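Spotting over-concentrated traffic comes down to counting how often each source appears. This minimal sketch flags any IP address (or, if you pass country codes instead, any region) responsible for more than a chosen share of total clicks; the 5% threshold is a hypothetical starting point that depends heavily on campaign size and targeting.

```python
from collections import Counter


def concentrated_sources(sources: list[str], max_share: float = 0.05) -> dict[str, float]:
    """Return each source whose share of all events exceeds `max_share`.

    `sources` holds one entry per click, such as an IP address or a country code.
    """
    total = len(sources)
    if total == 0:
        return {}
    counts = Counter(sources)
    return {src: n / total for src, n in counts.items() if n / total > max_share}


if __name__ == "__main__":
    # Hypothetical click log: one IP produces 30 of 100 clicks.
    clicks = ["198.51.100.9"] * 30 + [f"192.0.2.{i}" for i in range(70)]
    print(concentrated_sources(clicks))  # {'198.51.100.9': 0.3}
```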

Sudden and unexplained spikes in ad performance metrics, such as impressions, clicks, or conversions, can be an indication of ad fraud. These irregularities may suggest the use of fraudulent techniques like ad stacking or click bots to artificially boost campaign results.
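A common way to catch sudden spikes is to compare each day's count against a trailing baseline using a z-score: how many standard deviations the value sits above the recent mean. The seven-day window and threshold of 3 below are assumptions for illustration, and this simple version skips days with a perfectly flat history (zero standard deviation).

```python
from statistics import mean, stdev


def find_spikes(daily_counts: list[int], window: int = 7,
                z_threshold: float = 3.0) -> list[int]:
    """Return indexes of days whose count is more than `z_threshold` standard
    deviations above the mean of the preceding `window` days."""
    spikes = []
    for i in range(window, len(daily_counts)):
        history = daily_counts[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # A flat history (sigma == 0) has no spread to compare against, so skip it here.
        if sigma > 0 and (daily_counts[i] - mu) / sigma > z_threshold:
            spikes.append(i)
    return spikes


if __name__ == "__main__":
    # Hypothetical daily click counts with one suspicious burst on day 8.
    clicks = [510, 495, 530, 488, 502, 515, 499, 505, 2400, 520]
    print(find_spikes(clicks))  # [8]
```

A spike is only a prompt to investigate; launches, seasonal events, or a viral post can produce the same pattern with real users.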

Cookie stuffing occurs when a fraudster loads a visitor's browser with cookies from multiple websites. This makes it appear as though the user has visited those websites, even if they have not, and can be used to inflate the number of impressions an ad receives.

In ad stacking, multiple ads are layered on top of each other in a web page or mobile app. While only the top ad is visible, all the ads below register impressions. Ad fraudsters exploit this technique to create false impressions and artificially inflate ad performance metrics.
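If impression events record where each creative was rendered, ad stacking shows up as several different ads reporting the same on-screen position during a single page view. This sketch groups impressions by page view and exact slot rectangle; the field names (`page_view_id`, `x`, `y`, `width`, `height`, `ad_id`) are hypothetical, and matching exact rectangles is a simplification, since stacked ads may be slightly offset and a real check would test for overlap.

```python
from collections import defaultdict


def stacked_slots(impressions: list[dict]) -> dict[tuple, set[str]]:
    """Return slot positions where more than one distinct ad registered an impression
    during the same page view."""
    ads_by_position: dict[tuple, set[str]] = defaultdict(set)
    for imp in impressions:
        key = (imp["page_view_id"], imp["x"], imp["y"], imp["width"], imp["height"])
        ads_by_position[key].add(imp["ad_id"])
    return {key: ads for key, ads in ads_by_position.items() if len(ads) > 1}


if __name__ == "__main__":
    impressions = [
        # Three ads reported at the same 300x250 position: a likely stack.
        {"page_view_id": "pv1", "x": 0, "y": 0, "width": 300, "height": 250, "ad_id": "A"},
        {"page_view_id": "pv1", "x": 0, "y": 0, "width": 300, "height": 250, "ad_id": "B"},
        {"page_view_id": "pv1", "x": 0, "y": 0, "width": 300, "height": 250, "ad_id": "C"},
        {"page_view_id": "pv1", "x": 0, "y": 300, "width": 300, "height": 250, "ad_id": "D"},
    ]
    for position, ads in stacked_slots(impressions).items():
        print(position, sorted(ads))  # ('pv1', 0, 0, 300, 250) ['A', 'B', 'C']
```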

Click fraud involves the fraudulent manipulation of clicks on online ads. Ad fraudsters may employ bots or scripts to generate fake clicks, artificially inflating click-through rates (CTR) and draining advertising budgets without generating actual user engagement.
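A basic defense against scripted click fraud is a sliding-window rate check: count each source's clicks within a short time span and flag sources that exceed a limit. The one-minute window and three-click limit below are hypothetical thresholds, and keying on IP address alone is an assumption; real systems combine many identifiers because fraudsters rotate IPs.

```python
from collections import defaultdict, deque


def repeated_clicks(clicks: list[tuple[str, float]], window_seconds: float = 60.0,
                    max_clicks: int = 3) -> set[str]:
    """Return IPs that clicked more than `max_clicks` times within any `window_seconds` span.

    `clicks` is a list of (ip, unix_timestamp) pairs in any order.
    """
    recent: dict[str, deque] = defaultdict(deque)
    flagged = set()
    for ip, ts in sorted(clicks, key=lambda c: c[1]):
        q = recent[ip]
        q.append(ts)
        # Drop this IP's earlier timestamps that fall outside the sliding window.
        while ts - q[0] > window_seconds:
            q.popleft()
        if len(q) > max_clicks:
            flagged.add(ip)
    return flagged


if __name__ == "__main__":
    clicks = [("198.51.100.9", t) for t in (0, 5, 10, 15, 20)]
    clicks += [("192.0.2.44", t) for t in (0, 120, 240)]
    print(repeated_clicks(clicks))  # {'198.51.100.9'}
```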

Ad injections occur when unauthorized ads are injected into a user's web browser or mobile app without the knowledge or consent of the website or app owner. These injected ads compete with legitimate ads, diverting impressions and revenue away from publishers.

In device hijacking, fraudsters take control of a real user's device and mimic that user to click on ads, watch videos, fill out forms, and steal personal information.

Non-human traffic is any form of web traffic derived from a non-human source, such as bots and malware. It is also known as invalid traffic (IVT).

Ad fraudsters use pixel stuffing to load multiple ads within a single pixel or a tiny ad space. These hidden ads are not visible to users but still register impressions, leading to inflated ad metrics and wasted advertising budgets.
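Pixel stuffing leaves a telltale gap between the size a creative was supposed to occupy and the size it actually rendered at. Assuming your impression data captures both (the field names below are hypothetical), this sketch flags impressions whose rendered area is less than 1% of the declared creative area; the 1% cutoff is an illustrative assumption.

```python
def pixel_stuffing_suspects(impressions: list[dict], min_area_ratio: float = 0.01) -> list[str]:
    """Return IDs of impressions rendered far smaller than their declared creative size."""
    suspects = []
    for imp in impressions:
        declared_area = imp["declared_w"] * imp["declared_h"]
        rendered_area = imp["rendered_w"] * imp["rendered_h"]
        if declared_area > 0 and rendered_area / declared_area < min_area_ratio:
            suspects.append(imp["impression_id"])
    return suspects


if __name__ == "__main__":
    impressions = [
        # A 300x250 creative squeezed into a 1x1 pixel.
        {"impression_id": "imp-1", "declared_w": 300, "declared_h": 250,
         "rendered_w": 1, "rendered_h": 1},
        {"impression_id": "imp-2", "declared_w": 728, "declared_h": 90,
         "rendered_w": 728, "rendered_h": 90},
    ]
    print(pixel_stuffing_suspects(impressions))  # ['imp-1']
```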

Fraudsters send traffic from other locations and mask it so that it appears to come from the location the advertiser targeted.

Domain spoofing involves fraudsters misrepresenting the source of an ad impression by falsifying the domain or URL reported for the placement. They disguise their inventory as coming from reputable websites, tricking advertisers into believing their ads are being displayed on high-quality platforms.
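One widely used countermeasure is the IAB Tech Lab's ads.txt standard, in which publishers host a file listing the ad systems and seller account IDs authorized to sell their inventory. A buyer can then check whether the seller in a bid request actually appears in the ads.txt of the domain it claims to represent. This sketch only parses an ads.txt body that has already been fetched; the domains and account IDs are hypothetical, and it ignores parts of the spec such as `subdomain=` handling.

```python
def parse_ads_txt(text: str) -> set[tuple[str, str]]:
    """Return the (ad system domain, seller account ID) pairs listed in an ads.txt body."""
    authorized = set()
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line or "=" in line:
            continue  # skip blank lines and variable lines such as contact=...
        fields = [field.strip() for field in line.split(",")]
        # Data records: domain, account ID, DIRECT or RESELLER, optional certification ID.
        if len(fields) >= 3 and fields[2].upper() in ("DIRECT", "RESELLER"):
            authorized.add((fields[0].lower(), fields[1]))
    return authorized


def is_authorized_seller(ads_txt: str, ad_system: str, account_id: str) -> bool:
    """True if the claimed ad system and account ID appear in the publisher's ads.txt."""
    return (ad_system.lower(), account_id) in parse_ads_txt(ads_txt)


if __name__ == "__main__":
    ads_txt = """
# ads.txt for news.example (hypothetical)
exchange.example, pub-1234, DIRECT, abc123
reseller.example, 5678, RESELLER
contact=adops@news.example
"""
    print(is_authorized_seller(ads_txt, "exchange.example", "pub-1234"))  # True
    print(is_authorized_seller(ads_txt, "exchange.example", "pub-9999"))  # False
```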

Impression fraud involves generating false impressions by artificially inflating the number of times an ad is served or displayed. This can be achieved through techniques like pixel stuffing, hidden ad placements, or manipulating ad refresh rates.

Click fraud occurs when fraudulent clicks are generated on ads, often by automated bots or click farms. The aim is to increase the number of clicks without genuine user engagement, leading to inflated click-through rates and wasted advertising budgets.

Attribution fraud exploits the attribution models used in advertising campaigns. Fraudsters manipulate the attribution process to wrongfully claim credit for conversions or installs, leading to incorrect allocation of advertising budgets and misleading performance metrics.
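In mobile campaigns, one signal often used against attribution fraud is click-to-install time (CTIT), the gap between the attributed click and the install. An implausibly short gap can point to click injection (a click fired just before an install completes), while an extremely long one can point to click spamming. The 10-second and 24-hour bounds and the event fields below are assumptions for illustration, not recommended values.

```python
def attribution_suspects(events: list[dict], min_ctit_s: float = 10.0,
                         max_ctit_s: float = 24 * 3600.0) -> list[str]:
    """Flag installs whose click-to-install time (CTIT) is implausibly short or long.

    Each event is a dict with hypothetical keys: install_id, click_ts, install_ts (seconds).
    """
    suspects = []
    for e in events:
        ctit = e["install_ts"] - e["click_ts"]
        if ctit < min_ctit_s or ctit > max_ctit_s:
            suspects.append(e["install_id"])
    return suspects


if __name__ == "__main__":
    events = [
        {"install_id": "i1", "click_ts": 1_000.0, "install_ts": 1_004.0},  # 4 seconds
        {"install_id": "i2", "click_ts": 1_000.0, "install_ts": 1_600.0},  # 10 minutes
        {"install_id": "i3", "click_ts": 0.0, "install_ts": 259_200.0},    # 3 days
    ]
    print(attribution_suspects(events))  # ['i1', 'i3']
```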

Affiliate fraud involves fraudulent practices by affiliates or partner networks. It includes activities like cookie stuffing, where unauthorized cookies are placed on users' devices without their knowledge, leading to falsely attributed conversions and commissions.

Ad fraudsters use bot traffic to simulate user interactions and engagement with ads. Bots can generate fake clicks, impressions, or conversions, creating the illusion of genuine user activity and deceiving advertisers.

Lead generation fraud refers to the deceptive practices employed to generate false or low-quality leads in marketing campaigns. It involves activities such as submitting fabricated or irrelevant information, utilizing automated bots or scripts, or misleading users into providing their details without genuine intent.
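Two common checks against scripted lead submissions are a honeypot field (a form input hidden from people, so any value in it suggests a bot) and a minimum time between the form loading and being submitted. This sketch combines them with a crude email format check; the `website_hp` field name, the timestamp keys, and the 3-second cutoff are hypothetical, and none of these checks catches human-driven lead fraud on its own.

```python
def lead_risk_reasons(submission: dict, min_fill_seconds: float = 3.0) -> list[str]:
    """Return reasons a form submission looks fabricated or automated (empty if none)."""
    reasons = []
    # Hidden honeypot input: real visitors never see it, so it should stay empty.
    if submission.get("website_hp"):
        reasons.append("honeypot field filled")
    elapsed = submission["submitted_at"] - submission["form_loaded_at"]
    if elapsed < min_fill_seconds:
        reasons.append("form completed too quickly")
    # Very rough format check; it does not prove the address exists.
    email = submission.get("email", "")
    if "@" not in email or "." not in email.split("@")[-1]:
        reasons.append("malformed email")
    return reasons


if __name__ == "__main__":
    bot = {"website_hp": "http://spam.example", "form_loaded_at": 100.0,
           "submitted_at": 100.8, "email": "asdf"}
    human = {"website_hp": "", "form_loaded_at": 100.0,
             "submitted_at": 145.0, "email": "jane@example.com"}
    print(lead_risk_reasons(bot))    # ['honeypot field filled', 'form completed too quickly', 'malformed email']
    print(lead_risk_reasons(human))  # []
```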

Ad fraud leads to a significant waste of advertising budgets. Fraudulent activities such as fake impressions, clicks, and conversions drain advertisers' funds without providing any actual value or meaningful engagement with real users.

Ad fraud skews campaign performance metrics, leading to misleading results and reduced return on investment (ROI). Advertisers may struggle to accurately assess the effectiveness of their campaigns, make data-driven decisions, and optimize their strategies for better outcomes.

Ad fraud can harm a brand's reputation. When ads are associated with fraudulent or low-quality placements, it can erode consumer trust and perception of the brand. Negative experiences resulting from ad fraud can impact customer loyalty and hinder long-term brand growth.

Ad fraud strains the trust between advertisers and publishers. When advertisers unknowingly pay for fraudulent traffic or impressions, it can lead to strained relationships with publishers who may be unaware of the fraudulent activities occurring on their platforms.

Ad fraud undermines the overall health and sustainability of the ad tech industry. It affects the confidence of advertisers, leading to reduced investments and potential disruptions in the ecosystem. Ad fraud also puts legitimate ad tech companies at a disadvantage as they compete with fraudulent entities.

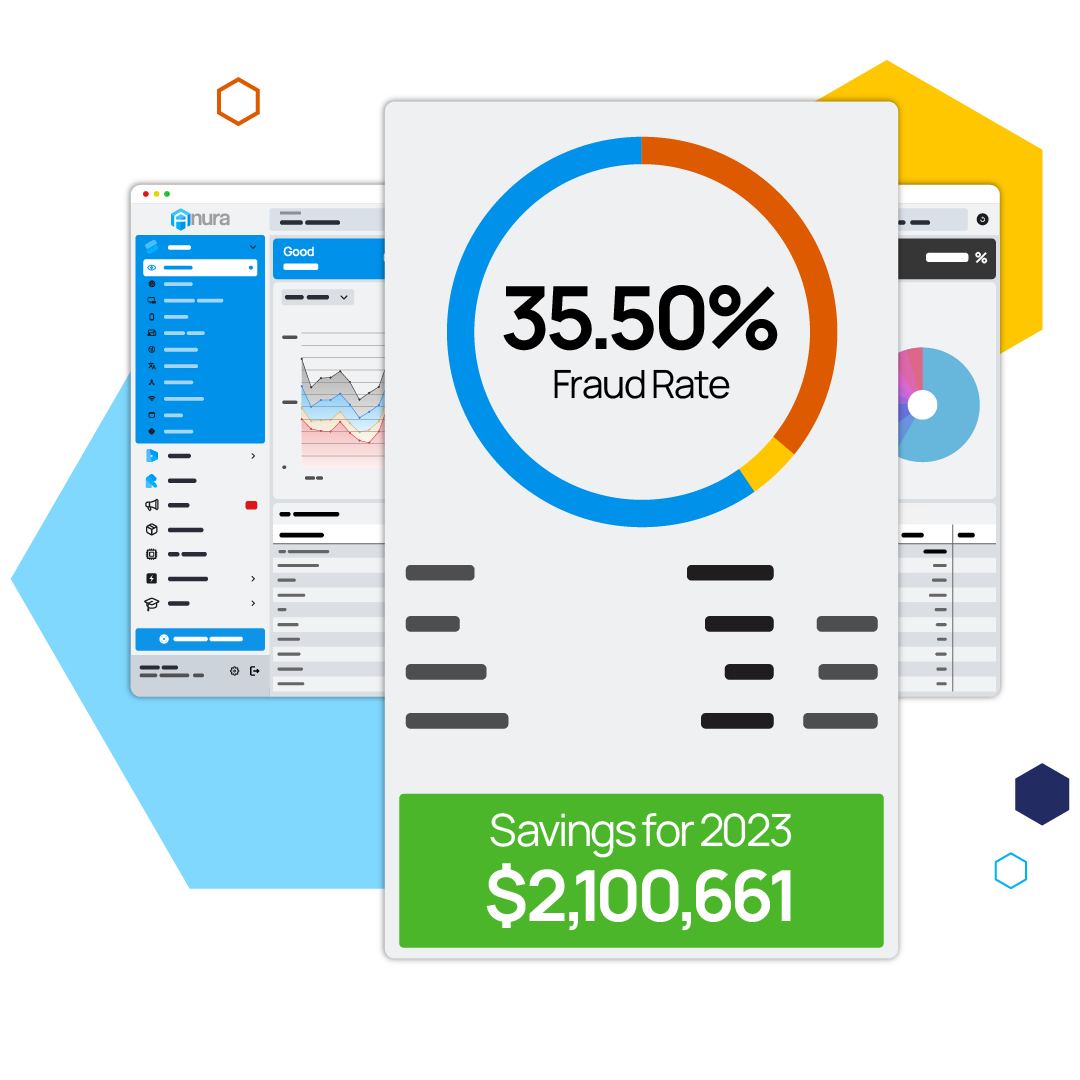

Anura ensures that your ad spend is protected from fraudulent activity, allowing you to focus on reaching real customers and achieving your business goals. Choose an ad fraud detection company that leverages cutting-edge technology to help keep your campaigns secure and efficient.

We love working with Anura and their Team. Rich and his entire group have built a great tool that anyone in marketing should be using. They have great support and know their stuff. I recommend anyone I know to try their tool!

-Brett Kaufman, Successful Media, LLC

Let’s cover some of the frequently asked questions about ad fraud detection to help you protect your precious marketing budget from digital ad fraud.

Ad fraud, also known as advertising fraud, occurs when digital ads receive false or non-genuine interactions designed to benefit fraudsters financially while misleading advertisers. These fake interactions, such as clicks, impressions, conversions, or form submissions, appear legitimate but do not reflect real human engagement or intent.

At its core, ad fraud depletes advertising budgets, skews campaign analytics, and erodes trust in digital marketing channels. Fraudsters exploit vulnerabilities in ad networks, programmatic platforms, and mobile or web traffic to generate revenue from invalid or non-human interactions.

Key forms of ad fraud include:

Why it matters: Ad fraud distorts performance metrics, inflates costs, and can reduce ROI across campaigns. Detecting and preventing ad fraud ensures your ad spend reaches actual users, preserves the integrity of your data, and protects your marketing investment.

How to combat ad fraud: Using advanced detection tools like Anura’s ad fraud protection platform helps advertisers identify bots, invalid traffic, and suspicious interactions in real time, preventing wasted spend and maintaining high-quality campaign performance.

Ad tech fraud refers to fraudulent activity that specifically targets the advertising technology ecosystem, including ad exchanges, networks, and programmatic platforms, by injecting invalid traffic, spoofing placements, and exploiting vulnerabilities in ad delivery systems. Ad tech fraud undermines trust in the broader advertising infrastructure and can inflate costs for advertisers while enriching fraudsters.

Mobile advertising operates across a large number of devices and apps, which opens additional vectors for fraud such as fake installs, click bots, and SDK manipulation. Mobile advertising fraud prevention is essential to protect budget, ensure conversions are genuine, and maintain accurate mobile campaign data. Solutions that identify non-human traffic and fraudulent patterns across mobile environments help block this invalid activity before it hurts performance.

Ad fraud can have severe consequences, including financial losses, inaccurate campaign performance analysis, reduced trust in digital advertising, and wasted marketing efforts. It is crucial to detect and mitigate ad fraud to ensure ad budgets are used effectively and advertising ecosystems remain trustworthy.

Ad fraud detection involves using sophisticated algorithms, machine learning techniques, and data analysis to identify patterns and anomalies indicative of fraudulent activity. It examines various metrics such as click-through rates, conversions, IP addresses, user behavior, and traffic sources to detect fraudulent patterns.

When your marketing efforts are centered around digital advertising, it's essential to minimize ad fraud across your campaigns. Generating revenue from online advertising is hard enough, so trimming inefficiencies must always be a priority.

Using the power of data science, machine learning, and human experts in fraud detection, Anura’s multi-layered approach is unparalleled at discerning quality visitors from fraudsters. Our technology analyzes hundreds of individual visitor data points nearly instantaneously, making it possible for our fraud detection experts to uncover patterns of fraudulent activity. Machine learning is powerful, but it takes the help of data science professionals to prevent false positives. That’s why Anura’s ad fraud detection solution is still very human-involved.

Common types of ad fraud include click fraud (invalid clicks on ads), impression fraud (falsely inflating ad impressions), bot traffic (automated scripts mimicking human behavior), ad stacking (layering multiple ads to generate false impressions), and domain spoofing (misrepresenting ad placements).

Ad fraud can result in wasted ad spend, inflated performance metrics, inaccurate targeting, reduced return on investment (ROI), and compromised brand reputation. By identifying and mitigating ad fraud, you can optimize your campaigns, improve targeting accuracy, and allocate budgets more efficiently.

Ad fraud detection solutions help you proactively identify and prevent fraudulent activities, ensuring your ad campaigns reach real users and drive genuine engagement. These solutions provide insights into campaign performance, enhance targeting accuracy, save ad spend, and safeguard your brand's reputation.

Ad fraud detection systems employ advanced algorithms and machine learning techniques to achieve high accuracy. However, it's important to note that fraudsters constantly evolve their tactics, so detection systems must continuously adapt to new threats. Reputable ad fraud detection providers strive to stay ahead of fraudulent techniques.

Ad fraud detection systems aim to identify and filter out fraudulent activity while preserving legitimate traffic. However, false positives can occur, flagging some legitimate traffic as potentially fraudulent. Reputable detection systems strike a balance between fraud prevention and minimizing false positives to maintain campaign effectiveness.
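The balance between catching fraud and avoiding false positives is usually measured on a reviewed sample of traffic. This sketch computes precision (how much flagged traffic was really fraudulent), recall (how much fraud was caught), and false positive rate (how much legitimate traffic was wrongly flagged) from confusion-matrix counts; the sample counts in the example are hypothetical.

```python
def detection_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Summarize a fraud filter from counts of true/false positives and negatives."""
    def ratio(num: int, den: int) -> float:
        return num / den if den else 0.0

    return {
        "precision": ratio(tp, tp + fp),            # flagged traffic that was actually fraud
        "recall": ratio(tp, tp + fn),               # fraudulent traffic that was flagged
        "false_positive_rate": ratio(fp, fp + tn),  # legitimate traffic wrongly flagged
    }


if __name__ == "__main__":
    # Hypothetical review of 10,000 visits.
    metrics = detection_metrics(tp=900, fp=100, tn=8_900, fn=100)
    for name, value in metrics.items():
        print(f"{name}: {value:.3f}")
```

Tightening a filter usually raises precision and lowers the false positive rate at the cost of recall, which is the trade-off the paragraph above describes.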

Integrating ad fraud detection involves working with a reputable provider who offers an API or SDK that can be implemented within your existing advertising technology stack. The provider can guide you through the integration process and provide support to ensure smooth operation and accurate fraud detection.

When selecting an ad fraud detection solution, consider factors such as accuracy, real-time monitoring capabilities, integration options, reporting and analytics features, customer support, and the provider's reputation. It's essential to evaluate multiple solutions and choose one that aligns with your specific needs and budget.

Google Ads is a powerful platform for reaching new customers, but like any advertising platform, it can be vulnerable to ad fraud. Anura’s sophisticated technology offers Google Ads fraud protection, so you can trust your online advertising on this global platform.

Both mobile and desktop fraud aim to generate fake clicks, impressions, or conversions to steal ad revenue. However, mobile devices provide a treasure trove of data points beyond just clicks and browsing behavior. Mobile ad fraud detection and prevention leverage this distinct data to identify fraudulent activity.

A strong ad fraud detection solution should provide real-time analytics, high accuracy rates, customizable reporting, and integration with your existing ad platforms. It should also detect both bot-driven and human-assisted fraud for complete protection.

Ad fraud detection technology automatically filters out invalid traffic before it’s counted in your analytics. This ensures your ad spend goes toward reaching real users, not fake clicks or impressions from fraudulent sources.
